Improving compatibility of registry cache with GitLab Container Registry

This article was originally published on 11th October 2023. Some of its contents may not be relevant anymore.


Michał Zgliński

DevOps Engineer

2024-03-06

#Tech

Time to read

13 mins

In this article

Introduction

Registry Cache migration

How to fix the clean-up policies? The partial fix

One more thing about provenance attestation

Final build command that is as compatible as it gets at the time of writing and what we achieved

Custom garbage collect script

Summary


In the ever-advancing realm of Docker and container technology, recent updates can help us better manage the container cache. This article takes a deep dive into the container registry cache and the latest developments that change the way we manage the GitLab Container Registry, its cleanup policies, and garbage collection.

With the recent move to BuildKit as the default builder for Docker, the registry cache became available. Here is a quick look at how to use it:

docker build

```shell
docker buildx build \
  --target app \
  --cache-from=type=registry,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:main \
  --cache-from=type=registry,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:feature \
  --cache-to=type=registry,mode=max,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:feature \
  --tag ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:feature \
  --push \
  .
```

In this example, we look for two possible cache images. The first is `main`, the latest cache from our default branch. The second is `feature`, our current branch slug, which is used if it exists (i.e., if we already ran this command on the branch). This single command replaces a set of commands that were previously required to build images using cache:

docker build

```shell
docker pull ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:main
docker pull ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:feature || true
docker build \
  --target app \
  --cache-from ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:main \
  --cache-from ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:feature \
  --tag ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:feature \
  .
docker push ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:feature
```

Note: this is a setup for the legacy build engine. To make it work with BuildKit, you need to add `--load` or `--push` to save the built image somewhere. You also need to add `--build-arg BUILDKIT_INLINE_CACHE=1` to the build command. After the switch to BuildKit, the build command no longer saves the cache anywhere except locally, which is not ideal in a CI environment.

The difference becomes even more obvious with multi-target Dockerfiles. With the old approach, you had to pull an image for every target in your Dockerfile (and push it to the registry as well). With the registry cache, everything resides in a single cache image. This is thanks to the `mode=max` argument, which exports the layers of all intermediate stages, not only those of the final image. Please check the description here.
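The two `--cache-from` references and the single `--cache-to` reference follow a simple naming convention. The hypothetical helper below assembles the same arguments in Python, which can be handy if you generate build commands from a script. It assumes that the placeholders `main` and `feature` map to GitLab's `CI_DEFAULT_BRANCH` and `CI_COMMIT_REF_SLUG`; this mapping is my assumption, not something the example prescribes. Also, according to the Docker documentation, Buildx's default `docker` driver does not support the `registry` cache backend. In CI you may therefore need a builder created with another driver, such as `docker-container`.

```python
import shlex
from typing import List


def buildx_cache_args(registry: str, project_path: str, image: str, target: str,
                      default_branch: str, branch_slug: str) -> List[str]:
    """Hypothetical helper: assemble `docker buildx build` arguments that read
    the registry cache of the default branch and of the current branch, and
    write the cache of the current branch only."""
    base = f"{registry}/{project_path}"
    # Cache images live in a separate "<image>.cache" repository
    cache_repo = f"{base}/{image}.cache"
    args = ["docker", "buildx", "build", "--target", target]
    # dict.fromkeys de-duplicates while keeping order, so on the default
    # branch only one --cache-from argument is added
    for ref in dict.fromkeys([default_branch, branch_slug]):
        args.append(f"--cache-from=type=registry,ref={cache_repo}:{ref}")
    # mode=max exports the layers of all intermediate stages, not only the final image
    args.append(f"--cache-to=type=registry,mode=max,ref={cache_repo}:{branch_slug}")
    args += ["--tag", f"{base}/{image}:{branch_slug}", "--push", "."]
    return args


if __name__ == "__main__":
    # Example values; in GitLab CI they could come from CI_REGISTRY, CI_PROJECT_PATH,
    # CI_DEFAULT_BRANCH and CI_COMMIT_REF_SLUG (an assumption of this sketch)
    print(shlex.join(buildx_cache_args(
        "registry.example.com", "group/project", "app", "app", "main", "feature")))
```

On the default branch both placeholders resolve to the same tag, so the helper emits a single `--cache-from` instead of reading the same cache twice.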

Benefits of registry cache vs inline cache:

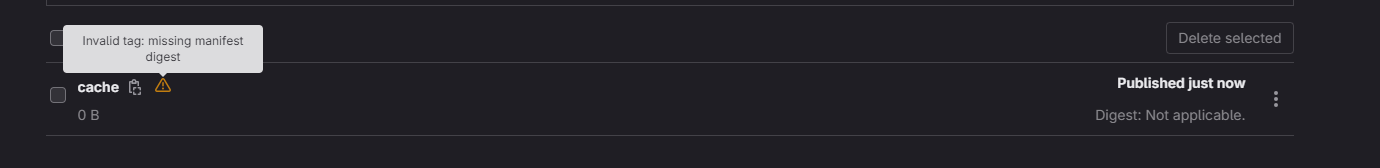

The only drawback I could find was compatibility with GitLab Container Registry. Cache images are shown as broken in the UI, and the API call to get the details of such a tag returns a 404 error.

This prevents the cleanup policies from working properly.


A detailed breakdown of the issue can be found here.

TLDR: The main reason for the broken compatibility of cache images is the use of an image index instead of an image manifest.

Example (from the GitHub ticket): instead of this index:

```json
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.index.v1+json",
  "manifests": [
    {
      "mediaType": "application/vnd.oci.image.layer.v1.tar+gzip",
      "digest": "sha256:136482bf81d1fa351b424ebb8c7e34d15f2c5ed3fc0b66b544b8312bda3d52d9",
      "size": 2427917,
      "annotations": {
        "buildkit/createdat": "2021-07-02T17:08:09.095229615Z",
        "containerd.io/uncompressed": "sha256:3461705ddf3646694bec0ac1cc70d5d39631ca2f4317b451c01d0f0fd0580c90"
      }
    },
    ...
    {
      "mediaType": "application/vnd.buildkit.cacheconfig.v0",
      "digest": "sha256:7aa23086ec6b7e0603a4dc72773ee13acf22cdd760613076ea53b1028d7a22d8",
      "size": 1654
    }
  ]
}
```

We could have the following manifest:

```json
{
  "schemaVersion": 2,
  "config": {
    "mediaType": "application/vnd.buildkit.cacheconfig.v0",
    "size": 1654,
    "digest": "sha256:7aa23086ec6b7e0603a4dc72773ee13acf22cdd760613076ea53b1028d7a22d8"
  },
  "layers": [
    {
      "mediaType": "application/vnd.oci.image.layer.v1.tar+gzip",
      "digest": "sha256:136482bf81d1fa351b424ebb8c7e34d15f2c5ed3fc0b66b544b8312bda3d52d9",
      "size": 2427917,
      "annotations": {
        "buildkit/createdat": "2021-07-02T17:08:09.095229615Z",
        "containerd.io/uncompressed": "sha256:3461705ddf3646694bec0ac1cc70d5d39631ca2f4317b451c01d0f0fd0580c90"
      }
    },
    ...
  ]
}
```
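The two shapes differ in their top-level keys. An index lists descriptors under `manifests`, while a manifest has one `config` descriptor and a `layers` list. That makes them easy to tell apart when inspecting what a registry actually stores. Below is a minimal sketch that assumes you already have the raw JSON as a Python dict, for instance from `docker buildx imagetools inspect --raw <ref>`. The function name is hypothetical.

```python
import json


def manifest_kind(document: dict) -> str:
    """Roughly classify an OCI JSON document by its top-level structure."""
    media_type = document.get("mediaType", "")
    # An image index points to other descriptors through a "manifests" list
    if "manifests" in document or media_type == "application/vnd.oci.image.index.v1+json":
        return "index"
    # An image manifest has a single "config" descriptor plus a "layers" list
    if "config" in document and "layers" in document:
        return "manifest"
    return "unknown"


if __name__ == "__main__":
    # Trimmed versions of the two examples above (digests and sizes omitted)
    index = json.loads('{"schemaVersion": 2, "mediaType": "application/vnd.oci.image.index.v1+json", "manifests": []}')
    manifest = json.loads('{"schemaVersion": 2, "config": {"mediaType": "application/vnd.buildkit.cacheconfig.v0"}, "layers": []}')
    print(manifest_kind(index), manifest_kind(manifest))  # index manifest
```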

Thankfully, an export option was implemented that allows switching between an index and a manifest:

image-manifest=<true|false>: whether to export cache manifest as an OCI-compatible image manifest rather than a manifest list/index (default: false, must be used with oci-mediatypes=true)
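The constraint in the option description above is easy to encode if you generate the `--cache-to` value in a script: `image-manifest=true` must be used together with `oci-mediatypes=true`. The following is a minimal sketch with a hypothetical function name. It spells out `oci-mediatypes=true` even though the example command below relies on the default, which, as far as I know, is `true` in recent BuildKit versions.

```python
def registry_cache_to(ref: str, mode: str = "max", image_manifest: bool = True,
                      oci_mediatypes: bool = True) -> str:
    """Hypothetical helper composing a --cache-to value for the registry cache.

    Rejects the combination image-manifest=true with oci-mediatypes=false,
    which the option description above rules out.
    """
    if mode not in ("min", "max"):
        raise ValueError(f"unsupported cache mode: {mode!r}")
    if image_manifest and not oci_mediatypes:
        raise ValueError("image-manifest=true must be used with oci-mediatypes=true")
    options = [
        "type=registry",
        f"mode={mode}",
        f"image-manifest={str(image_manifest).lower()}",
        f"oci-mediatypes={str(oci_mediatypes).lower()}",
        f"ref={ref}",
    ]
    return ",".join(options)


if __name__ == "__main__":
    print("--cache-to=" + registry_cache_to("registry.example.com/group/project/app.cache:feature"))
```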

Example code:

docker build

```shell
docker buildx build \
  --target app \
  --cache-from=type=registry,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:main \
  --cache-from=type=registry,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:feature \
  --cache-to=type=registry,mode=max,image-manifest=true,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:feature \
  --tag ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:feature \
  --push \
  .
```

Note: the `image-manifest=true` export option has been officially available since BuildKit v0.12.0; however, some v0.11.6 versions also include it. The best way I found to check whether you have it is simply to try using it.

Results:

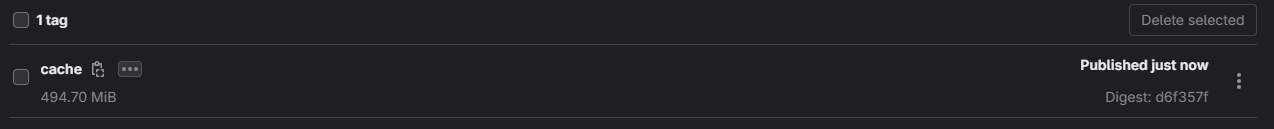

Now the compatibility is much better: the UI no longer shows a warning symbol, and we can see the size of the cache. However, one issue remains, and it might not be obvious at first glance. If we look at the API response for this tag, we will notice that the `created_at` attribute is empty. Unfortunately, this means these images will never be removed by a cleanup policy, since it can only remove images that are at least 7 days old.

api response

```json
{
  "name": "cache",
  "path": "*******/docker-regsitry-test/cra-test:cache",
  "location": "registry.gitlab.com/*******/docker-regsitry-test/cra-test:cache",
  "revision": "b9129182721421bdbe20646a6bc3072d1d6d39f7e503b1c74cebb6fd047f7056",
  "short_revision": "b91291827",
  "digest": "sha256:d6f357fb4ca5de37862c5dcfc8e6e5236a71d86505cba077c6cfe4730050fdf0",
  "created_at": null,
  "total_size": 518727681
}
```
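To make the consequence concrete, here is a simplified model of an age-based cleanup rule. It is my own sketch, not GitLab's implementation. A tag whose `created_at` is `null` has no age, so no age threshold can ever match it. The function name and the 7-day default are assumptions that mirror the text above.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def old_enough_for_cleanup(tag_details: dict, older_than_days: int = 7,
                           now: Optional[datetime] = None) -> bool:
    """Simplified model of an age-based cleanup rule (not GitLab's actual code)."""
    created_at = tag_details.get("created_at")
    if not created_at:
        # No timestamp: the tag's age is unknown, so an age rule can never match it
        return False
    # fromisoformat() only accepts a trailing "Z" on Python 3.11+, so normalize it
    created = datetime.fromisoformat(created_at.replace("Z", "+00:00"))
    if created.tzinfo is None:
        created = created.replace(tzinfo=timezone.utc)  # assume UTC for naive timestamps
    now = now or datetime.now(timezone.utc)
    return now - created >= timedelta(days=older_than_days)


if __name__ == "__main__":
    # The API response above has "created_at": null, so the tag is never old enough
    print(old_enough_for_cleanup({"name": "cache", "created_at": None}))  # False
```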

If you are reading this article because you are experiencing similar issues, you might also have noticed a problem with regular images in your GitLab registry: they may appear broken in the same way as the cache images. This is most likely caused by SLSA provenance attestations. Docker Desktop (as of v4.16) and Docker Buildx (as of v0.10.0) add them to builds by default and store them alongside the image in an image index, the same structure that breaks the cache images. At the time of writing, GitLab Container Registry does not support this; you can follow the issue here and here.

The current fix is to add the `--provenance=false` flag to the build command, or to set `BUILDX_NO_DEFAULT_ATTESTATIONS=1` as an environment variable.

docker build

```shell
docker buildx build \
  --target app \
  --cache-from=type=registry,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:main \
  --cache-from=type=registry,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:feature \
  --cache-to=type=registry,mode=max,image-manifest=true,ref=${CI_REGISTRY}/${CI_PROJECT_PATH}/app.cache:feature \
  --tag ${CI_REGISTRY}/${CI_PROJECT_PATH}/app:feature \
  --provenance=false \
  --push \
  .
```
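To check whether an image you pushed earlier carries such attestations, look at its raw index, for instance the output of `docker buildx imagetools inspect --raw <image>`. BuildKit stores each attestation as an extra manifest in the index. That manifest has platform `unknown/unknown` and the annotation `vnd.docker.reference.type: attestation-manifest`. The sketch below assumes you already have that JSON as a Python dict; the function name is hypothetical.

```python
import json


def attestation_manifests(index: dict) -> list:
    """Return the descriptors of an image index that BuildKit marks as attestation manifests."""
    return [
        descriptor
        for descriptor in index.get("manifests", [])
        if descriptor.get("annotations", {}).get("vnd.docker.reference.type") == "attestation-manifest"
    ]


if __name__ == "__main__":
    # Trimmed example of an index pushed with default attestations (digests omitted)
    sample = json.loads("""
    {
      "mediaType": "application/vnd.oci.image.index.v1+json",
      "manifests": [
        {"mediaType": "application/vnd.oci.image.manifest.v1+json",
         "platform": {"architecture": "amd64", "os": "linux"}},
        {"mediaType": "application/vnd.oci.image.manifest.v1+json",
         "platform": {"architecture": "unknown", "os": "unknown"},
         "annotations": {"vnd.docker.reference.type": "attestation-manifest"}}
      ]
    }
    """)
    print(len(attestation_manifests(sample)))  # 1
```

An image built with `--provenance=false` and a single platform should come back as a plain manifest with no `manifests` list, so the function returns an empty list for it.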

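What we achieved:

- cache images are no longer shown as broken in the GitLab UI, and their size is visible,
- with provenance attestations disabled, regular images are no longer broken either.

What is left to do:

- cache tags still have an empty `created_at`, so cleanup policies will never remove them,
- tags pushed before these changes (cache images using an image index, images with provenance attestations) remain broken, and cleanup policies do not handle them properly.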

To tackle these problems, I've created a Python script that iterates over the container registry repositories of every project it has access to. Each tag is processed with the following algorithm:

- `latest`, `master`, `main`, and tags that match `v.*\..*\..*` are always left untouched,
- tags are deleted under the following conditions:
  - the tag details endpoint returns a 404 error (this covers images with provenance attestations and cache images using an image index), or
  - `created_at` is empty, the registry name ends with `.cache`, and either there is no counterpart registry without the `.cache` suffix or the counterpart registry has no tag with the same name.

This script, of course, works on some assumptions:

- cache images are pushed to a registry named after the image registry with a `.cache` suffix, using the same tag as the image,
- `latest`, `master`, `main`, and tags that match `v.*\..*\..*` are considered stable and should never be removed, even if corrupted.

Naturally, if your registry setup is a bit different, the script can be adjusted, and I will explain how and where below.
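The stable-tag rule deserves a closer look. `re.match` only anchors at the start of the string, so `v.*\..*\..*` accepts any tag that starts with `v` and contains at least two dots, for example `very.old.build`. The sketch below compares the script's pattern with a stricter `vMAJOR.MINOR.PATCH` alternative. The stricter pattern is my assumption, not part of the original script, so pick whichever matches your tagging scheme.

```python
import re

STABLE_NAMES = {"master", "main", "latest"}
# Pattern used by the script: anything starting with "v" that contains two dots
SCRIPT_PATTERN = re.compile(r"v.*\..*\..*")
# Hypothetical stricter alternative: exactly vMAJOR.MINOR.PATCH
STRICT_PATTERN = re.compile(r"v\d+\.\d+\.\d+")


def is_stable_tag(tag_name: str, strict: bool = False) -> bool:
    if tag_name in STABLE_NAMES:
        return True
    if strict:
        # fullmatch rejects suffixes such as "v1.2.3-rc1"
        return STRICT_PATTERN.fullmatch(tag_name) is not None
    return SCRIPT_PATTERN.match(tag_name) is not None


if __name__ == "__main__":
    for name in ["main", "v1.2.3", "very.old.build", "v1.2", "feature-x"]:
        print(name, is_stable_tag(name), is_stable_tag(name, strict=True))
```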

First, let's bootstrap the main function, in which we will get the required parameters from the command line:

- `--gitlab-url`: the base URL of the GitLab instance,
- `--token`: the personal access token (PAT) the script will use to authenticate,
- `--dry-run` (optional): can be used for testing, to see what the script would do without deleting anything.

Next, we will write the main loop logic. It iterates over all projects the user has access to. If a project has the container registry enabled, it iterates over all registries within that project, and finally processes every tag within those registries.

main

```python
import argparse


def main():
    parser = argparse.ArgumentParser(description="Script to process GitLab registry tags.")
    parser.add_argument('--gitlab-url', required=True, help='GitLab URL.')
    parser.add_argument('--token', required=True, help='Authentication token.')
    parser.add_argument('--dry-run', action='store_true',
                        help='If present, only print tags that should be deleted without actually deleting them.')
    args = parser.parse_args()

    global DRY_RUN, GITLAB_URL, HEADERS
    DRY_RUN = args.dry_run
    GITLAB_URL = args.gitlab_url
    HEADERS = {
        "Private-Token": args.token
    }

    # Iterate over projects -> registries -> tags
    projects = get_all_projects()
    for project in projects:
        if not project['container_registry_enabled']:
            continue
        registries = get_project_registries(project['id'])
        for registry in registries:
            tags = get_registry_tags(project['id'], registry['id'])
            for tag in tags:
                process_tag(project, registry, tag)


if __name__ == "__main__":
    main()
```

Now let's write those data-gathering functions:

data gathering

```python
import requests


def get_all_projects():
    return get_paginated_data("/api/v4/projects?order_by=id&sort=asc")


def get_project_registries(project_id):
    return get_paginated_data(f"/api/v4/projects/{project_id}/registry/repositories?")


def get_registry_tags(project_id, registry_id):
    return get_paginated_data(f"/api/v4/projects/{project_id}/registry/repositories/{registry_id}/tags?")


def get_tag_details(project_id, registry_id, tag_name):
    response = requests.get(f"{GITLAB_URL}/api/v4/projects/{project_id}/registry/repositories/{registry_id}/tags/{tag_name}", headers=HEADERS)
    return response


def get_paginated_data(endpoint):
    # Endpoints end with "?" (or an existing query string), so pagination parameters can be appended with "&"
    page = 1
    items = []
    while True:
        url = f"{GITLAB_URL}{endpoint}&per_page=100&page={page}"
        response = requests.get(url, headers=HEADERS)
        if response.status_code == 200:
            data = response.json()
            if not data:
                break
            items.extend(data)
            page += 1
        else:
            # Print the raw body: error responses are not guaranteed to be JSON
            print(f"Failed to retrieve data from {url}. Status code: {response.status_code}. Payload: {response.text}")
            break
    return items
```
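`get_paginated_data` relies on every endpoint ending in `?` (or an existing query string) so that `&per_page=...&page=...` can be appended. If you adapt the script, a slightly more robust variant builds the query string with `urllib.parse.urlencode` and keeps the stop-on-empty-page loop separate, so it can be tested without a GitLab instance. Both function names below are hypothetical, and error handling is left out of this sketch.

```python
from typing import Callable, List
from urllib.parse import urlencode


def page_url(base_url: str, endpoint: str, page: int, per_page: int = 100, **params) -> str:
    """Build a paginated API URL without relying on a trailing '?' in the endpoint."""
    query = {**params, "per_page": per_page, "page": page}
    return f"{base_url.rstrip('/')}{endpoint}?{urlencode(query)}"


def collect_pages(fetch_page: Callable[[int], list], max_pages: int = 10_000) -> List:
    """Call fetch_page(1), fetch_page(2), ... until an empty page is returned."""
    items: List = []
    for page in range(1, max_pages + 1):
        data = fetch_page(page)
        if not data:
            break
        items.extend(data)
    return items


# Possible usage with requests (not executed here, no status-code handling):
#   collect_pages(lambda p: requests.get(
#       page_url(GITLAB_URL, "/api/v4/projects", p, order_by="id", sort="asc"),
#       headers=HEADERS).json())

if __name__ == "__main__":
    fake_pages = {1: ["a", "b"], 2: ["c"]}
    print(collect_pages(lambda p: fake_pages.get(p, [])))  # ['a', 'b', 'c']
```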

Now that we have all the data we need to process a tag, we can implement the `process_tag` function. If you wish to change the cleanup logic, the `reason_and_delete_decision` function is the best place to do so. I've added extra comments explaining in detail what is happening.

tag processing

```python
import re

import requests


def process_tag(project, registry, tag):
    should_delete, reason = reason_and_delete_decision(project, registry, tag)
    if should_delete:
        if DRY_RUN:
            print(f"Tag '{tag['name']}' in Project: '{project['name']}', Registry: '{registry['name']}' should be deleted! - Reason: {reason}")
        else:
            print(f"Deleting tag '{tag['name']}' in Project: '{project['name']}', Registry: '{registry['name']}' - Reason: {reason}..", end=" ")
            delete_tag(project['id'], registry['id'], tag['name'])
    else:
        print(f"Tag '{tag['name']}' in Project: '{project['name']}', Registry: '{registry['name']}' - {reason}")


def reason_and_delete_decision(project, registry, tag):
    tag_name = tag['name']

    # If the tag name is something we consider stable, leave it be; change the condition here to suit your needs
    if tag_name in ["master", "main", "latest"] or re.match(r"v.*\..*\..*", tag_name):
        return False, f"Tag '{tag_name}' is a stable tag."

    # First deletion condition of the algorithm above: it covers images with provenance
    # attestations and cache images using an image index
    response = get_tag_details(project['id'], registry['id'], tag_name)
    if response.status_code == 404:
        return True, f"Details of tag '{tag_name}' do not exist."
    # Never delete a tag just because the details request failed for another reason
    if response.status_code != 200:
        return False, f"Could not fetch details of tag '{tag_name}' (status code: {response.status_code}), skipping."

    # created_at is read from the tag details: the tag list endpoint only returns name, path and location.
    # If we are dealing with a cache registry and created_at is empty, this is a partially compatible cache image
    if not response.json().get('created_at') and registry['name'].endswith('.cache'):
        counterpart_registry_name = registry['name'].removesuffix('.cache')
        # Look for the counterpart registry without the .cache suffix; there are only two possible results:
        # - an empty list if the counterpart registry does not exist
        # - a list with one element, the counterpart registry object
        counterpart_registries = [r for r in get_project_registries(project['id']) if r['name'] == counterpart_registry_name]
        # The counterpart registry does not exist
        if not counterpart_registries:
            return True, f"Counterpart registry for '{registry['name']}' does not exist."
        counterpart_tags = get_registry_tags(project['id'], counterpart_registries[0]['id'])
        # If the counterpart image is missing, it was probably deleted by the GitLab built-in cleanup,
        # so we can remove the corresponding cache image
        if not any(t['name'] == tag_name for t in counterpart_tags):
            return True, f"Counterpart tag for '{tag_name}' in registry '{counterpart_registry_name}' does not exist."

    return False, "LGTM!"


def delete_tag(project_id, registry_id, tag_name):
    response = requests.delete(f"{GITLAB_URL}/api/v4/projects/{project_id}/registry/repositories/{registry_id}/tags/{tag_name}", headers=HEADERS)
    if response.status_code == 200:
        print(f"Tag '{tag_name}' has been deleted!")
    else:
        print(f"Failed to delete tag '{tag_name}'. Status code: {response.status_code}. Payload: {response.text}")
```
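Because `reason_and_delete_decision` mixes API calls with the decision itself, changes to it are hard to test. One option is to pull the decision into a pure function that works on already-fetched data; this is a sketch, not part of the original script. Its inputs are the HTTP status of the tag details call, the tag's `created_at`, and a hypothetical `tags_by_registry` mapping of registry names to their tag names. The sketch also skips tags whose details could not be fetched for reasons other than a 404, which is a conservative choice on my part.

```python
import re
from typing import Mapping, Optional, Set, Tuple

STABLE_TAGS = {"master", "main", "latest"}
STABLE_PATTERN = re.compile(r"v.*\..*\..*")


def decide(tag_name: str, registry_name: str, details_status: int,
           created_at: Optional[str],
           tags_by_registry: Mapping[str, Set[str]]) -> Tuple[bool, str]:
    """Pure version of the cleanup decision (hypothetical refactor).

    tags_by_registry maps each registry name in the project to its tag names.
    """
    if tag_name in STABLE_TAGS or STABLE_PATTERN.match(tag_name):
        return False, "stable tag"
    if details_status == 404:
        return True, "tag details do not exist"
    if details_status != 200:
        return False, "details unavailable, skipping"
    if not created_at and registry_name.endswith(".cache"):
        counterpart = registry_name.removesuffix(".cache")
        if counterpart not in tags_by_registry:
            return True, "counterpart registry does not exist"
        if tag_name not in tags_by_registry[counterpart]:
            return True, "counterpart tag does not exist"
    return False, "LGTM!"


if __name__ == "__main__":
    registries = {"app": {"main", "feature"}, "app.cache": {"main", "feature", "old-branch"}}
    for tag in sorted(registries["app.cache"]):
        print(tag, decide(tag, "app.cache", 200, None, registries))
```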

That's it! We can now put it all together. I will also add a statistical summary, printed at the end of the script execution or when the script is interrupted:

registry-gc.py

```python
import argparse
import re
import signal
import sys

import requests

# Module-level defaults, so print_counters() also works if the script is interrupted early
DRY_RUN = False
project_count = registry_count = tag_count = deleted_tag_count = 0


def main():
    # Register signal handlers, so the summary is printed when the job is interrupted
    signal.signal(signal.SIGINT, print_counters)
    signal.signal(signal.SIGTERM, print_counters)

    parser = argparse.ArgumentParser(description="Script to process GitLab registry tags.")
    parser.add_argument('--gitlab-url', required=True, help='GitLab URL.')
    parser.add_argument('--token', required=True, help='Authentication token.')
    parser.add_argument('--dry-run', action='store_true',
                        help='If present, only print tags that should be deleted without actually deleting them.')
    args = parser.parse_args()

    global DRY_RUN, GITLAB_URL, HEADERS
    DRY_RUN = args.dry_run
    GITLAB_URL = args.gitlab_url
    HEADERS = {
        "Private-Token": args.token
    }

    # Initialize counters
    global project_count, registry_count, tag_count, deleted_tag_count
    project_count = 0
    registry_count = 0
    tag_count = 0
    deleted_tag_count = 0

    projects = get_all_projects()
    for project in projects:
        if not project['container_registry_enabled']:
            continue
        project_count += 1
        registries = get_project_registries(project['id'])
        for registry in registries:
            registry_count += 1
            tags = get_registry_tags(project['id'], registry['id'])
            for tag in tags:
                process_tag(project, registry, tag)

    print_counters()


## Data gathering

def get_all_projects():
    return get_paginated_data("/api/v4/projects?order_by=id&sort=asc")


def get_project_registries(project_id):
    return get_paginated_data(f"/api/v4/projects/{project_id}/registry/repositories?")


def get_registry_tags(project_id, registry_id):
    return get_paginated_data(f"/api/v4/projects/{project_id}/registry/repositories/{registry_id}/tags?")


def get_tag_details(project_id, registry_id, tag_name):
    response = requests.get(f"{GITLAB_URL}/api/v4/projects/{project_id}/registry/repositories/{registry_id}/tags/{tag_name}", headers=HEADERS)
    return response


def get_paginated_data(endpoint):
    page = 1
    items = []
    while True:
        url = f"{GITLAB_URL}{endpoint}&per_page=100&page={page}"
        response = requests.get(url, headers=HEADERS)
        if response.status_code == 200:
            data = response.json()
            if not data:
                break
            items.extend(data)
            page += 1
        else:
            print(f"Failed to retrieve data from {url}. Status code: {response.status_code}. Payload: {response.text}")
            break
    return items


# Tag processing

def process_tag(project, registry, tag):
    global tag_count, deleted_tag_count
    tag_count += 1
    should_delete, reason = reason_and_delete_decision(project, registry, tag)
    if should_delete:
        deleted_tag_count += 1
        if DRY_RUN:
            print(f"Tag '{tag['name']}' in Project: '{project['name']}', Registry: '{registry['name']}' should be deleted! - Reason: {reason}")
        else:
            print(f"Deleting tag '{tag['name']}' in Project: '{project['name']}', Registry: '{registry['name']}' - Reason: {reason}..", end=" ")
            delete_tag(project['id'], registry['id'], tag['name'])
    else:
        print(f"Tag '{tag['name']}' in Project: '{project['name']}', Registry: '{registry['name']}' - {reason}")


def reason_and_delete_decision(project, registry, tag):
    tag_name = tag['name']
    if tag_name in ["master", "main", "latest"] or re.match(r"v.*\..*\..*", tag_name):
        return False, f"Tag '{tag_name}' is a stable tag."

    response = get_tag_details(project['id'], registry['id'], tag_name)
    if response.status_code == 404:
        return True, f"Details of tag '{tag_name}' do not exist."
    if response.status_code != 200:
        return False, f"Could not fetch details of tag '{tag_name}' (status code: {response.status_code}), skipping."

    # created_at comes from the tag details; the tag list endpoint does not return it
    if not response.json().get('created_at') and registry['name'].endswith('.cache'):
        counterpart_registry_name = registry['name'].removesuffix('.cache')
        counterpart_registries = [r for r in get_project_registries(project['id']) if r['name'] == counterpart_registry_name]
        if not counterpart_registries:
            return True, f"Counterpart registry for '{registry['name']}' does not exist."
        counterpart_tags = get_registry_tags(project['id'], counterpart_registries[0]['id'])
        if not any(t['name'] == tag_name for t in counterpart_tags):
            return True, f"Counterpart tag for '{tag_name}' in registry '{counterpart_registry_name}' does not exist."

    return False, "LGTM!"


def delete_tag(project_id, registry_id, tag_name):
    response = requests.delete(f"{GITLAB_URL}/api/v4/projects/{project_id}/registry/repositories/{registry_id}/tags/{tag_name}", headers=HEADERS)
    if response.status_code == 200:
        print(f"Tag '{tag_name}' has been deleted!")
    else:
        print(f"Failed to delete tag '{tag_name}'. Status code: {response.status_code}. Payload: {response.text}")


# Statistics

def print_counters(signum=None, frame=None):
    # Print the counters
    print("\nSummary:")
    print(f"Number of projects processed: {project_count}")
    print(f"Number of registries processed: {registry_count}")
    print(f"Number of tags processed: {tag_count}")
    if DRY_RUN:
        print(f"Number of tags marked for deletion: {deleted_tag_count}")
    else:
        print(f"Number of tags deleted: {deleted_tag_count}")
    # When called as a signal handler, exit with a non-zero status
    if signum:
        sys.exit(1)


#####

if __name__ == "__main__":
    main()
```

Lastly, you might want to run this script on a schedule. To do so, you can use the following `.gitlab-ci.yml` configuration together with GitLab Pipeline Schedules. Make sure to pass the `API_TOKEN` variable using GitLab CI/CD variables.

.gitlab-ci.yml

```yaml
stages:
  - run

.base garbage collect:
  stage: run
  image: python:3-alpine
  timeout: 12 hours
  interruptible: true
  before_script:
    - pip install requests
  artifacts:
    when: always
    paths:
      - gc.log
    expire_in: 30 days

garbage collect:
  extends: .base garbage collect
  only:
    variables:
      - $CI_DEFAULT_BRANCH == $CI_COMMIT_REF_NAME
  script:
    - python3 registry-gc.py --gitlab-url "$CI_SERVER_PROTOCOL://$CI_SERVER_HOST:$CI_SERVER_PORT" --token $API_TOKEN 2>&1 | tee gc.log

dry garbage collect:
  extends: .base garbage collect
  only:
    refs:
      - merge_requests
  script:
    - python3 registry-gc.py --gitlab-url "$CI_SERVER_PROTOCOL://$CI_SERVER_HOST:$CI_SERVER_PORT" --token $API_TOKEN --dry-run 2>&1 | tee gc.log
```

With the above script, I managed to delete 85% of the tags in a single namespace containing a mid-sized microservice project. This amounted to a little over 4.5 TB of data removed from the S3 storage backend used by that GitLab instance.

If you have any doubts, reach out to us! We will help you tackle the obstacles on your way. Trust an experienced software development company.
